Copyright © 2025. All rights reserved by Centaur.ai


At Centaur Labs, we believe that great AI begins with great data. In medical imaging, that means honoring every pixel, every voxel, and every frame. Whether you’re a researcher training a model, a clinician validating results, or an annotator labeling anatomical structures, success hinges on clarity, consistency, and context.

This post unpacks the unsung backbone of modern imaging—DICOM—and explores how our segmentation tools inside the Centaur DICOM Viewer empower high-fidelity annotation at scale.

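DICOM (Digital Imaging and Communications in Medicine) is more than a file format; it’s the universal language of medical imaging. Every CT scan, MRI, and ultrasound image comes wrapped in a DICOM container that stores metadata alongside the pixel data. This metadata includes patient and study identifiers, acquisition parameters, and geometric tags such as pixel spacing and slice thickness.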

These tags may seem technical, but they’re critical. Miss a slice thickness? Your 3D reconstruction could misrepresent anatomy. Misread pixel spacing? Measurements could be off by millimeters. In healthcare, that margin matters.
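To make the pixel-spacing point concrete, here is a minimal sketch that converts a distance measured in pixels into millimeters. It assumes the Pixel Spacing attribute (0028,0030) has already been read from the header as a `[row_spacing, col_spacing]` pair in mm, which is the order the DICOM standard defines. The function name `pixel_distance_mm` is hypothetical and not part of any Centaur or OHIF API.

```python
import math


def pixel_distance_mm(p1, p2, pixel_spacing):
    """Physical distance in mm between two (row, col) pixel coordinates.

    pixel_spacing follows the DICOM Pixel Spacing (0028,0030) order:
    [row_spacing, col_spacing], i.e. the distance between adjacent rows
    (vertical step) and between adjacent columns (horizontal step), in mm.
    """
    row_spacing, col_spacing = pixel_spacing
    d_row = (p2[0] - p1[0]) * row_spacing
    d_col = (p2[1] - p1[1]) * col_spacing
    return math.hypot(d_row, d_col)


# Anisotropic pixels: 0.5 mm between rows, 0.75 mm between columns.
true_mm = pixel_distance_mm((0, 0), (80, 40), [0.5, 0.75])
# Ignoring the tag (treating each pixel as 1 mm) gives a very different answer.
naive_mm = pixel_distance_mm((0, 0), (80, 40), [1.0, 1.0])
print(true_mm, naive_mm)
```

In this example the same two points are 50 mm apart when the spacing is respected but roughly 89 mm apart when it is ignored, which is exactly the kind of error the paragraph above warns about.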

Medical images typically fall into two categories, each requiring a different annotation mindset:

Volume-Based Imaging (e.g., CT/MR): These are 3D scans built from stacks of thin slices, each slice one voxel deep and each a window into the anatomy at a specific position. Accurate 3D reconstructions require a deep understanding of DICOM geometry.

2D Time-Based Imaging (e.g., Ultrasound): These are 2D frames recorded across time. Rather than reconstructing volume, you’re tracking motion, frame by frame. Segmentation here is always 2D, but no less meaningful.
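For volume-based series, a common way to respect DICOM geometry is to order slices by their position along the slice normal rather than by file order, and to derive the spacing from those positions instead of relying on Slice Thickness (0018,0050) alone, since the nominal thickness and the actual spacing between slices can differ. The sketch below assumes Image Position (Patient) (0020,0032) and Image Orientation (Patient) (0020,0037) have already been parsed into Python lists; the helper names are hypothetical.

```python
def slice_normal(orientation):
    """Normal of the image plane: cross product of the row and column
    direction cosines stored in Image Orientation (Patient) (0020,0037)."""
    rx, ry, rz, cx, cy, cz = orientation
    return (ry * cz - rz * cy, rz * cx - rx * cz, rx * cy - ry * cx)


def slice_spacings(positions, orientation):
    """Sort slices along the normal and return the gaps between neighbors in mm.

    positions: Image Position (Patient) (0020,0032) of each slice, [x, y, z] in mm.
    """
    normal = slice_normal(orientation)
    # Project each slice origin onto the normal to get its depth in the stack.
    depths = sorted(sum(p * n for p, n in zip(pos, normal)) for pos in positions)
    return [b - a for a, b in zip(depths, depths[1:])]


# Axial series: pixels along a row advance in +x, along a column in +y,
# so the slice normal points along +z.
axial = [1, 0, 0, 0, 1, 0]
# Slice origins listed in arbitrary (e.g. file) order.
positions = [
    [-120.0, -120.0, 5.0],
    [-120.0, -120.0, 0.0],
    [-120.0, -120.0, 10.0],
    [-120.0, -120.0, 2.5],
]
gaps = slice_spacings(positions, axial)
print(gaps)
if max(gaps) - min(gaps) > 1e-3:
    print("Warning: non-uniform slice spacing; check for missing slices")
```

The uneven last gap (5.0 mm instead of 2.5 mm) is the kind of irregularity, such as a missing slice, that would distort a 3D reconstruction if uniform spacing were simply assumed.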

Behind every scan is a clinical story in progress. Understanding whether you’re segmenting space or time changes how you interpret and annotate each image.
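For time-based series, the equivalent bookkeeping happens along the time axis. A multi-frame ultrasound clip may record either a constant Frame Time (0018,1063), in milliseconds, or a Frame Time Vector (0018,1065) of per-frame increments whose first entry is 0. The sketch below, with hypothetical function names, turns either form into per-frame timestamps and looks up which frame is on screen at a given moment; it assumes the tag values have already been read from the header.

```python
import bisect
from itertools import accumulate


def frame_timestamps_ms(number_of_frames, frame_time_ms=None, frame_time_vector=None):
    """Return each frame's time offset from the start of the clip, in ms."""
    if frame_time_vector is not None:
        if len(frame_time_vector) != number_of_frames:
            raise ValueError("Frame Time Vector must have one entry per frame")
        # Entries are increments since the previous frame (the first is 0),
        # so a running sum gives each frame's offset.
        return list(accumulate(frame_time_vector))
    if frame_time_ms is not None:
        # Constant frame interval: frame i starts at i * Frame Time.
        return [i * frame_time_ms for i in range(number_of_frames)]
    raise ValueError("Need either Frame Time or Frame Time Vector")


def frame_at(t_ms, timestamps):
    """Index of the frame displayed at t_ms (the last frame starting at or before it)."""
    return max(bisect.bisect_right(timestamps, t_ms) - 1, 0)


# Five frames at a constant 40 ms interval (25 frames per second).
ts = frame_timestamps_ms(5, frame_time_ms=40)
print(ts)                 # [0, 40, 80, 120, 160]
print(frame_at(100, ts))  # 2
# Irregular timing recorded as a Frame Time Vector.
print(frame_timestamps_ms(4, frame_time_vector=[0, 33, 34, 33]))  # [0, 33, 67, 100]
```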

We’ve embedded our full suite of segmentation tools directly into the open-source OHIF viewer to support varied use cases across devices, from laptops to tablets, and across users, from researchers to physicians.

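Our annotation tools are purpose-built and adaptable, organized into three core categories: contour tools for outlining structures, pixel-based brush tools for painting and erasing regions, and AI-assisted segmentation powered by SAM (Segment Anything Model).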

Best of all, SAM-generated predictions can be refined with contour or pixel tools for maximum precision.
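The refine-after-AI workflow can be pictured as editing a shared binary mask. The sketch below is illustrative only: it assumes a predicted mask (from SAM or any other model) is stored as a 2D grid of booleans, and `apply_brush` is a hypothetical helper, not the viewer’s actual implementation. A circular brush stamp either adds pixels the model missed or erases pixels it over-segmented.

```python
def apply_brush(mask, center, radius, erase=False):
    """Return a copy of a 2D boolean mask with one circular brush stamp applied.

    center is (row, col); pixels within `radius` of it are set to True,
    or to False when erase=True.
    """
    rows, cols = len(mask), len(mask[0])
    cr, cc = center
    out = [row[:] for row in mask]  # leave the original prediction untouched
    for r in range(max(0, cr - radius), min(rows, cr + radius + 1)):
        for c in range(max(0, cc - radius), min(cols, cc + radius + 1)):
            if (r - cr) ** 2 + (c - cc) ** 2 <= radius ** 2:
                out[r][c] = not erase
    return out


def area(mask):
    """Number of foreground pixels."""
    return sum(sum(row) for row in mask)


# Hypothetical AI prediction: a 3x3 block in a 7x7 image.
predicted = [[2 <= r <= 4 and 2 <= c <= 4 for c in range(7)] for r in range(7)]
refined = apply_brush(predicted, (2, 2), radius=0, erase=True)  # trim one corner
refined = apply_brush(refined, (3, 5), radius=1)                # extend to the right
print(area(predicted), area(refined))  # 9 12
```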

Different users need different tools, and Centaur’s OHIF integration respects that.

Tool interoperability is also key: you can easily start with AI, switch to manual, or jump between shapes and brushes.
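One way to let shape-based and brush-based tools work on the same annotation is to convert contours into pixel masks. The sketch below assumes a contour is a closed polygon of (x, y) vertices in pixel coordinates and rasterizes it with the standard even-odd (ray-casting) rule evaluated at each pixel center; the resulting boolean grid can then be edited with pixel tools. The function name and the coordinate convention (x = column, y = row, pixel centers at +0.5) are hypothetical choices for illustration.

```python
def polygon_to_mask(vertices, rows, cols):
    """Rasterize a closed polygon of (x, y) vertices into a rows x cols boolean mask.

    Pixel (r, c) is inside if its center (c + 0.5, r + 0.5) passes the
    even-odd ray-casting test: a horizontal ray cast to the right of the
    center crosses the polygon boundary an odd number of times.
    """
    mask = [[False] * cols for _ in range(rows)]
    n = len(vertices)
    for r in range(rows):
        y = r + 0.5
        for c in range(cols):
            x = c + 0.5
            inside = False
            j = n - 1  # previous vertex, so each edge is (vertices[j], vertices[i])
            for i in range(n):
                xi, yi = vertices[i]
                xj, yj = vertices[j]
                # Only edges that straddle the ray's y can be crossed.
                if (yi > y) != (yj > y):
                    x_cross = xi + (y - yi) * (xj - xi) / (yj - yi)
                    if x < x_cross:
                        inside = not inside
                j = i
            mask[r][c] = inside
    return mask


square = [(1, 1), (4, 1), (4, 4), (1, 4)]
mask = polygon_to_mask(square, rows=6, cols=6)
for row in mask:
    print("".join("#" if v else "." for v in row))
```

Once the contour is a mask, a brush or eraser simply flips entries in the same grid, so switching tools does not require a separate data model.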

While OHIF provides a strong foundation for visualization, the Centaur platform extends far beyond viewing, transforming image inspection into a full annotation pipeline with built-in quality control, feedback loops, and consensus modeling. As annotators draw segmentations or respond to classification prompts, their submissions are recorded and immediately analyzed for agreement with others.

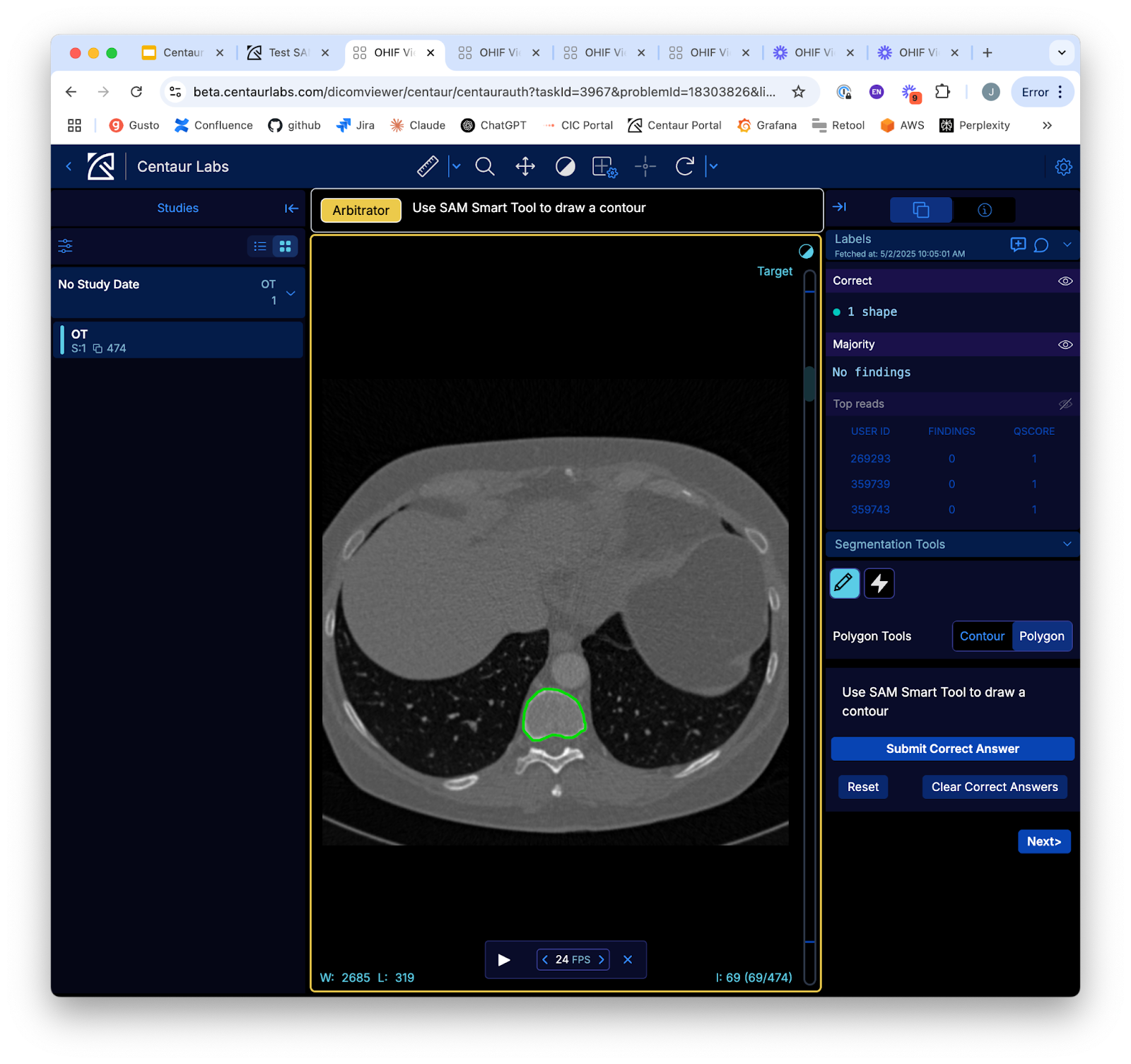
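The post does not say how agreement is scored, so the following rests on an assumption: for segmentations, a widely used overlap measure is the Dice coefficient, 2|A ∩ B| / (|A| + |B|). This sketch represents each annotator’s mask as a set of (row, col) pixels, computes pairwise Dice, and averages it per annotator, which is one simple way to surface submissions that disagree with the rest. The function names are hypothetical.

```python
from itertools import combinations


def dice(a, b):
    """Dice coefficient between two masks given as sets of (row, col) pixels."""
    if not a and not b:
        return 1.0  # convention: two empty masks agree perfectly
    return 2 * len(a & b) / (len(a) + len(b))


def agreement_scores(masks):
    """Mean Dice of each annotator's mask against every other annotator's mask."""
    n = len(masks)
    if n < 2:
        raise ValueError("Need at least two annotators to measure agreement")
    totals = [0.0] * n
    for i, j in combinations(range(n), 2):
        d = dice(masks[i], masks[j])
        totals[i] += d
        totals[j] += d
    return [t / (n - 1) for t in totals]


a = {(0, 0), (0, 1), (1, 0), (1, 1)}
b = {(0, 0), (0, 1), (1, 0)}
c = {(0, 0), (0, 1), (1, 0), (1, 1)}
print(dice(a, b))
print(agreement_scores([a, b, c]))  # annotator b scores lowest
```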

For more advanced workflows, we offer an arbitrator mode, which lets power users or customers inspect submissions from multiple annotators, compare disagreements, and create a gold-standard consensus through direct review. Once consensus is established, results are automatically compiled and made available for download in a structured, standardized format, ready for model training or clinical validation. This layered approach (human insight, automated scoring, and transparent arbitration) demonstrates the real power of Centaur’s platform when built on top of OHIF: not just viewing, but decision-making at scale.
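As a rough illustration of how a consensus mask and an arbitration queue might be derived, the sketch below uses a plain pixel-wise majority vote. This is an assumed baseline, not a description of Centaur’s consensus modeling, which the post does not detail: pixels chosen by more than half of the annotators form the consensus, and pixels where annotators split are collected as candidates for arbitrator review. The function name is hypothetical.

```python
from collections import Counter


def majority_consensus(masks):
    """Pixel-wise majority vote over masks given as sets of (row, col) pixels.

    Returns (consensus, contested): consensus holds pixels chosen by more than
    half of the annotators; contested holds pixels chosen by some but not all.
    """
    n = len(masks)
    votes = Counter(p for m in masks for p in m)
    consensus = {p for p, v in votes.items() if 2 * v > n}
    contested = {p for p, v in votes.items() if v < n}
    return consensus, contested


a = {(0, 0), (0, 1), (1, 0), (1, 1)}
b = {(0, 0), (0, 1), (1, 0)}
c = {(0, 0), (0, 1), (1, 0), (1, 1), (2, 2)}
consensus, contested = majority_consensus([a, b, c])
print(sorted(consensus))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
print(sorted(contested))  # [(1, 1), (2, 2)]
```

Under this rule, ties (possible with an even number of annotators) fall outside the consensus; a real pipeline might instead route them to an arbitrator or weight annotators differently.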

We don’t just build tools; we build trust: trust in the data, trust in the process, and trust in the people behind each annotation. When segmentations are precise and metadata is respected, the resulting insights become clinically meaningful. That, in turn, powers AI systems that assist in diagnosis, disease tracking, and treatment guidance.

Every DICOM header is a data blueprint. Every annotation is a decision. And behind every decision is a human. That’s the Centaur way: combining human insight with machine efficiency to shape the future of medical AI.

If you work with medical imaging, we’d love to show you how our OHIF-based tools can accelerate your workflow without compromising quality.

For a demonstration of how we can support your AI model training and evaluation with greater accuracy, scalability, and value, schedule a demo with Centaur.ai.
